- 130 King Street West, Suite 1800

- P.O. Box 427

- Toronto, ON, Canada M5X 1E3

- (416) 865-3392

- info@triparagon.com

EXECUTIVE SUMMARY

Why data centre uptime should be your top priority.1 Ensuring the uptime of mission-critical applications has never been more important. Enterprises depend on the infrastructure services of third-party service providers such as Tri-Paragon Inc. to keep their businesses running. An in-depth Infonetics Research survey found that companies were losing as much as $100 million per year to downtime.2

Although the consequences of outages vary by industry, organizations agree that recouping lost revenue and rebuilding corporate reputation can be difficult. While natural disasters and equipment failures cannot be avoided, their impact on downtime can be mitigated through planning and automation. Contact Tri-Paragon to learn how its Uptime Reassurance Strategy and Assessment Program can help you identify your downtime risks and how best to mitigate them.

Tri-Paragon aids in the following areas to assist in achieving your uptime objectives:

Please give us a call at 416 865-3392 or send us an email at info@triparagon.com

NOW LET US EXAMINE THE COLD HARD FACTS OF UPTIME

FACT #1: THE EFFECTS OF DOWNTIME ARE EXPENSIVE.

There was a time when virtualized servers and applications were used solely for non-mission-critical environments. But now, especially with cloud computing adoption on the rise, the mission-critical applications that businesses rely on are running in virtualized environments. Leading organizations understand that any amount of downtime can be expensive, even if virtualization offers some built-in protections against it.

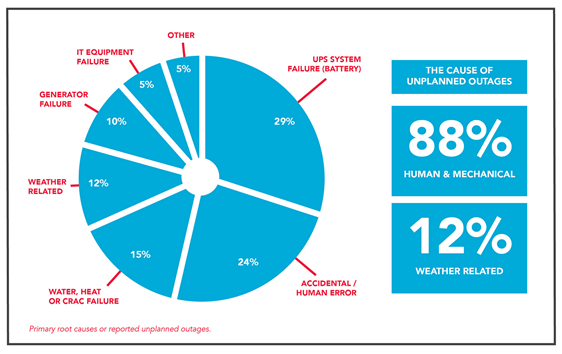

According to an Infonetics survey,2 the most common causes of downtime are:

Organizations reported that they experienced an average of 2 outages and 4 degradations per month, each lasting about 6 hours. These events have caused companies to lose as much as $100 million per year to downtime. Unplanned downtime is as much a business issue as it is an IT issue; it never produces revenue for the companies experiencing it but certainly contributes to revenue loss.
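To put those averages in perspective, the arithmetic can be sketched as follows. The event counts and duration come from the survey figures quoted above; the function name and the non-leap-year assumption are illustrative only, and the result is a rough annualization rather than a figure reported by the survey.

```python
# Survey averages quoted in the text above.
OUTAGES_PER_MONTH = 2
DEGRADATIONS_PER_MONTH = 4
HOURS_PER_EVENT = 6

MONTHS_PER_YEAR = 12
HOURS_PER_YEAR = 365 * 24  # assumption: non-leap year


def annual_impaired_hours(outages, degradations, hours_per_event,
                          months=MONTHS_PER_YEAR):
    """Hours per year spent in an outage or a degraded state (hypothetical helper)."""
    return (outages + degradations) * hours_per_event * months


impaired = annual_impaired_hours(
    OUTAGES_PER_MONTH, DEGRADATIONS_PER_MONTH, HOURS_PER_EVENT
)
share_of_year = impaired / HOURS_PER_YEAR

print(f"Impaired hours per year: {impaired}")
print(f"Share of the year affected: {share_of_year:.1%}")
```

Under these averages, full outages and degradations together account for several hundred hours a year, which helps explain why the reported losses are so large.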

“Fixing the downtime issue is the smallest cost component. The real cost is the toll downtime takes on employee productivity and company revenue, illustrating the criticality of ICT infrastructure in the day-to-day operations of an organization.”

Matthias Machowinski, Directing Analyst for Enterprise Networks and Video, Infonetics Research, 2015 (note 2)

1 http://internetofthingsagenda.techtarget.com/news/4500256105/Data-centre-uptime-pressure-mounts-as-IoT-takes-hold

2 http://www.infonetics.com/pr/2014/Cost-Server-Application-Network-Downtime-Survey-Highlights.asp

In another survey,3 IDC examined the annual impact of application downtime across Fortune 1000 organizations. IDC found that:

Reputation and loyalty

The biggest cost of downtime is how it affects customer satisfaction. Would you still have your customers’ loyalty after recovering from the unplanned downtime?

Employee productivity

Morale is challenged by downtime. Employees who are trying to resolve the problem work under extremely high stress, which may result in human error that further compounds the complexity of the issue.

Regulatory and contract compliance

Substantial penalties and fines can be imposed if you fail to fulfill the conditions of an SLA. It is crucial to know the true cost of downtime so that organizations can determine what kind of backup and disaster recovery investments make sense for their business.

“As data centres continue to evolve to support businesses and organizations that are becoming more social, mobile, and cloud-based, there is an increasing need for a growing number of companies and organizations to make it a priority to minimize the risk of downtime and commit the necessary investment in infrastructure technology and resource.”

Peter Panfil, Vice President, Global Power, Emerson Network Power, 2013 (note 4)

3 http://info.appdynamics.com/rs/appdynamics/images/DevOps-metrics-Fortune1K.pdf

4 http://www.emersonnetworkpower.com/en-US/About/NewsRoom/NewsReleases/Pages/Emerson-Ponemon-Cost-Unplanned-DataCentre-Outages.aspx

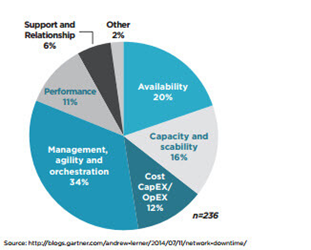

The demand for 24/7 access to IT services and applications is increasing. Mission-critical production environments must be up 24 hours a day, every day of the year. A Gartner survey reveals that availability is the most important aspect of nearly every network.

Other system requirements like performance, scalability, management, and agility require the network to be always online.5
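An always-on requirement is often easier to reason about as a downtime budget. The sketch below converts an availability target into the minutes of downtime it allows per year. The specific targets (99%, 99.9%, 99.99%, 100%) are illustrative assumptions and do not come from the surveys cited here, and the helper name is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: non-leap year


def allowed_downtime_minutes(availability_percent):
    """Minutes of downtime per year permitted by an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


# Illustrative targets only; substitute the figure from your own SLA.
for target in (99.0, 99.9, 99.99, 100.0):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% availability allows {minutes:.1f} minutes of downtime per year")
```

Each additional "nine" cuts the allowable downtime by a factor of ten, which is why moving from a high target to true 24/7 operation demands disproportionately more planning and redundancy.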

Application uptime and system performance depend on a variety of factors, including:

As more businesses embrace the Cloud, data centres and carriers are compelled to re-architect their systems and applications to keep up with their clients’ requirements.

Power spikes, sags, and outages require redundant critical power and cooling components such as UPS modules, chillers or pumps, and engine generators. It is important to have sustained power availability for reliability.

Uptime requires a degree of equipment redundancy. The requirement for a basic server is N+1, while higher levels of uptime require more items of redundant equipment (N+M).
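The effect of adding redundant units can be sketched with a simple probability model. This is a minimal illustration, assuming identical units that fail independently and a hypothetical per-unit availability of 99%; real equipment shares failure modes (common power feeds, firmware, human error), so actual gains are usually smaller. The function name and the example values are assumptions, not figures from the source.

```python
from math import comb


def system_availability(n_required, m_spare, unit_availability):
    """Probability that at least n_required of (n_required + m_spare) units are up.

    Assumes identical units that fail independently of one another.
    """
    total = n_required + m_spare
    return sum(
        comb(total, up)
        * unit_availability ** up
        * (1 - unit_availability) ** (total - up)
        for up in range(n_required, total + 1)
    )


# Hypothetical example: a load that needs 4 units, each available 99% of the time.
UNIT_AVAILABILITY = 0.99
for spares in (0, 1, 2):
    availability = system_availability(4, spares, UNIT_AVAILABILITY)
    print(f"N+{spares}: system availability = {availability:.6f}")
```

Under these assumptions, each spare unit sharply reduces the chance that fewer than N units are running, which is the reasoning behind moving from N+1 to N+M for higher uptime targets.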

An edge data centre’s facilities should all be certified to the highest industry standards and meet compliance requirements, including HIPAA, PCI DSS, SSAE 16, SOC 2 and ISAE 3402.

The data centre must be highly scalable, and systems need to be faster every year. Ensuring uptime is critical to providing a better customer experience; when customers lose patience, organizations risk losing customers, reputation, and profitability.

6 http://www.idc.com/getdoc.jsp?containerId=US40546615

FACT #3: UPTIME IS HARD TO SUSTAIN.

Even with the help of modern technology, data centre operations are still hampered by sporadic and persistent issues that demand immediate attention. Of all these issues, unplanned downtime is the most pressing. Businesses should ensure a seamless end-user experience and uninterrupted line-of-business operations.

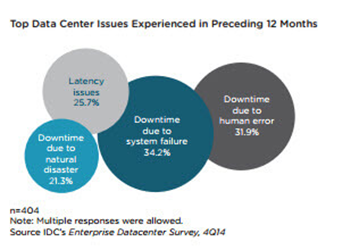

As shown in the figure above, downtime is the most common issue faced by data centre operators. The respondents cited system failure, human error, and natural disasters as the causes of downtime.

It’s hard to keep your operations up if you’re using an old and unstable IT infrastructure with inadequate monitoring tools. An inefficiently modeled data centre that needs continuous process improvement can also contribute to system failure. Eliminating these inefficiencies in your system can greatly help in minimizing unplanned downtime. Preparing a data centre strategy and roadmap helps avoid unexpected expenses due to system failure by proactively planning for continuous improvement.

The reality is that system downtime can often be traced to human error. Data centre operators must have flawless processes and execution to ensure the systems are maintained, repaired, tested, and monitored 24/7. Oftentimes, downtime is the result of multiple breakdowns in processes or human execution. An assessment of your data centre processes identifies and resolves gaps that can lead to unexpected downtime.

Disasters are inevitable, whether they are natural or “man-made”, such as major power grid outages. Having a robust disaster recovery plan in place can prepare you for the inevitable. Implementing and validating your DR strategy and processes can go a long way toward preventing unnecessary outages when disasters strike.

FACT #4: SUSTAINING 100% UPTIME IS NOT MISSION IMPOSSIBLE.

Maintaining 100% uptime can be achieved through detailed planning, system maintenance, and management by an experienced staff of data centre professionals. While automation and systems redundancy are important, experienced data centre professionals are the most important ingredient in maintaining uptime.6

IDC’s survey on The Problems of Downtime and Latency in the Enterprise Datacentre lists these essential guidelines for ensuring 100% uptime:6

1. Prevent system failure.

To have an efficient and reliable data centre, regular monitoring and updating of critical system infrastructure is a must. Automation and centralized monitoring solutions can also help in preventing system failure.

2. Reduce human error.

Documenting and following standard methods and procedures is critical to maintaining uptime. A recurring assessment process must be in place to validate the standard methods and procedures and to ensure they are updated as needed. Oftentimes, data centre operators become overly dependent on systems to maintain uptime. The reality is that, more often than not, human error is involved when a system or process breaks down. Operators can reduce downtime by ensuring experienced professionals are monitoring, maintaining, and managing the power, cooling, and infrastructure 24/7.

3. Ensure robust disaster planning.

Having a disaster recovery plan in place for potential natural disasters and other impacting events is critical. Being proactive, for example by testing backup diesel generators regularly (and for an adequate duration) and taking precautionary steps ahead of anticipated natural disasters, can minimize downtime.

Following this critical methodology can ensure that you stay online when a crisis does strike. Planning, automation, and system redundancy are important strategies in reducing or eliminating downtime. Managing redundant and resilient systems with an experienced technical staff can ensure that operations are always up and running.

COLOCATE WITH A REDUNDANT AND COMPLIANT DATA CENTRE.

Colocation offers services that can augment your existing systems. Most colocation facilities are designed with resilient critical systems, redundant battery backup and cooling, and a scalable infrastructure that you can take advantage of. Leading colocation sites offer 100% uptime with the help of their robust infrastructure. Even though this may provide a level of security around uptime, there is still a need to have a resilient backup and disaster recovery plan in place for workloads assigned to a colocation environment.

CONCLUSION

These cold, hard facts about downtime demonstrate the importance and challenge of keeping applications up and running with 100% availability. Downtime has serious consequences and can cost businesses lost revenue, lost customers, and diminished brand loyalty.

Tri-Paragon aids in the following areas to assist in achieving your uptime objectives:

Please give us a call at 416 865-3392 or send us an email at info@triparagon.com